| The Andrew Johnston Namib Desert camouflage masterclass |

| The 2018 Photo Adventures Namibia Tour crosses the line | |

| Camera: Panasonic DC-G9 | Date: 28-11-2018 13:19 | Resolution: 3767 x 3767 | ISO: 200 | Exp. bias: 0 EV | Exp. Time: 1/500s | Aperture: 5.0 | Focal Length: 28.0mm | Lens: LUMIX G VARIO 12-35/F2.8 | |

Well, a line, anyway. We’ll all be back over the line sometime tomorrow, when we fly back. That’s sad.

From the left: Lee (group leader and owner of Photo Adventures), Ann, John B, Paul, Alison, Keith, Nigel, Tuhafenny (our excellent guide and driver from Wild Dog Safaris), John L, and yours truly.

| Shooting with twin Canons | |

| Camera: Panasonic DC-G9 | Date: 28-11-2018 08:52 | Resolution: 5184 x 2920 | ISO: 200 | Exp. bias: -33/100 EV | Exp. Time: 1/800s | Aperture: 5.0 | Focal Length: 16.0mm | Lens: LUMIX G VARIO 12-35/F2.8 | |

I noticed while gathering for the bushman walk that five of our group were "packing" a pair of Canons. This shot was inevitable.

Thanks to John B for the title – excellent photographer’s joke. I am happy to explain if required.

| Kalahari Bushmen | |

| Camera: Panasonic DC-G9 | Date: 28-11-2018 07:26 | Resolution: 3046 x 3046 | ISO: 200 | Exp. bias: -33/100 EV | Exp. Time: 1/1000s | Aperture: 5.5 | Focal Length: 59.0mm | Lens: LUMIX G VARIO 35-100/F2.8 | |

Having been out until gone 11pm doing the night photography, I boycotted the dawn shoot back in the quiver tree forest, had a bit of a lie in, and joined the party at breakfast. We then moved off north. On maps the B1 looks like a major road: Namibia’s main north-south artery. In practice it’s a fairly narrow single-carriageway road with a surface which has seen better days, and our average speed was even lower than on the good road from Lüderitz. This adds insult to the potential injury that from where we joined the road to the next major town at Mariental is over 220km without even a gas station or loo stop!

Anyway, we did the trip without incident and only one emergency stop (just as well, as trees are also in short supply), and after Mariental turned east into the edge of the Kalahari Desert, ending up at another game reserve, Bagatelle. I have to say that for a "desert", that edge of the Kalahari is currently looking a lot greener than I expected, but apparently the rains were a bit later this year, and that region got more than usual.

We had a relaxed lunch, entertained by some meerkats, but unfortunately I didn’t have my camera. Towards sundown we set off for another game drive. This started well, with good views of kudu, springbok and giraffes as well as various birds. It was spoilt slightly when our driver panicked thinking he had lost his bird-recognition crib sheet, and insisted on turning round and driving back at break-neck speed along the route we had already covered, ignoring our instructions to calm down. (The missing sheet turned out to be behind his seat…). However after a couple of beers watching the sun go down I was reasonably mollified, and I’m quite pleased with the bird photos.

Lilac breasted roller

In the morning we joined a couple of Kalahari bushmen who walked us through the reserve to their camp, pointing out various tracks and giving us a demonstration of traditional hunting and trapping techniques. At the camp we met the wider family and got a chance, unique so far on this trip, to do some portraiture.

It was a little sad when we learned on the way back they actually live in a nice house attached to the lodge and carry the camp around in a Hilux, but I guess that’s progress…

| Quiver Trees | |

| Camera: Panasonic DC-G9 | Date: 26-11-2018 18:40 | Resolution: 3888 x 3888 | ISO: 200 | Exp. bias: 0 EV | Exp. Time: 1/125s | Aperture: 7.1 | Focal Length: 12.0mm | Lens: LUMIX G VARIO 12-35/F2.8 | |

Sadly we’re into the last few days of the trip and have to spend most of the next few days hacking back from the extreme south west of Namibia to Windhoek which is well to the north.

Monday started with a short walk around the very colourful town of Lüderitz, which is a Bavarian seaside town, if that’s not a massive conflict of metaphors… The short walk was then followed by one of the longest and most frustrating financial transactions I have experienced – trying to change £100 in the Standard Bank. This involved all sorts of ID checks, the young teller had obviously never seen British money before, and their counting machine refused to recognise one of my £20 notes, so I actually managed to change £90. In 20 minutes. Grr…

The long drive east was straightforward but surprisingly slow, with our driver obviously obeying some size-related limit which hadn’t been an issue on the unsurfaced roads. It’s more comfortable on tarmac, but not necessarily quicker.

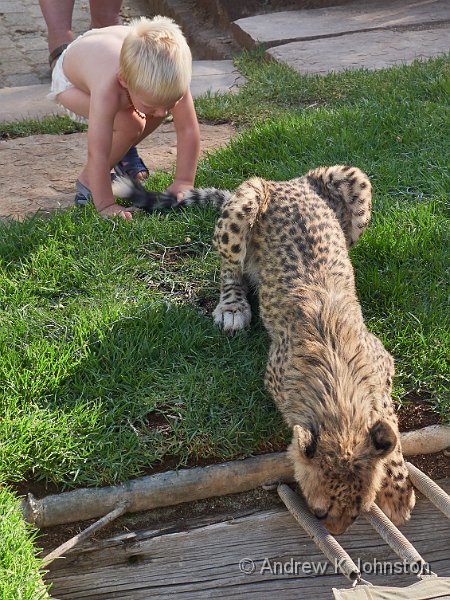

By mid afternoon we reached our overnight destination, the Quiver Tree Forest. These "trees" (they are actually giant succulents like cacti or aloes) are found in ones and twos all over Namibia but only grow in significant numbers in a few places. As well as the forest there are other attractions: we were just in time for feeding the rescued cheetahs, which I had expected to be caged in a compound but turned out to be wandering around with the farm’s dogs and toddlers. I got to stroke a cheetah, another personal first.

Go on, pull!

Sunset photographing the quiver trees was very enjoyable and generated some great images, and after dinner a few of us went back to try and capture the night sky with the trees as foreground. I’m not yet 100% convinced about my images, but it was an enjoyable experience nonetheless.

Night sky over the quiver tree forest

| Obelixa the brown hyena | |

| Camera: Panasonic DC-G9 | Date: 25-11-2018 11:48 | Resolution: 5184 x 3456 | ISO: 200 | Exp. bias: -33/100 EV | Exp. Time: 1/200s | Aperture: 6.3 | Focal Length: 300.0mm | Lens: LUMIX G VARIO 100-300/F4.0-5.6II | |

Today we visited two ghost towns based around diamond mines. In the morning we visited Elizabeth Bay, which is about half an hour from Lüderitz behind a substantial security screen as it shares its location and access road with an active diamond mine.

Elizabeth Bay is quite obviously an industrial site supported by worker accommodation and facilities, even though it is right next to the sea. The location is because diamond-rich sand was dropped as rivers reached the sea, and diamonds could be collected simply by washing and sieving the right seams of sand.

The town is in an advanced state of decay despite only having been abandoned in 1951 because the seaward bricks of each building are simply disintegrating under the onslaught of wind and salt spray, and then buildings collapse in turn.

The highlight for me was an encounter with a brown hyena. These shy and almost (by hyena standards, anyway) cute animals are endangered, and only about 2500 live on the Namibian coast. Some live near Elizabeth Bay, and when we arrived we met a BBC team who are making a documentary about them, using camera traps in the buildings.

Anyway, during my explorations I came face to face, on three occasions, with one hyena, who was completely unfazed and quite happy to be photographed. Our guide confirmed she is an elderly female known as Obelixa, who is habituated to humans, but it was still a fascinating encounter with a quiet, rare creature.

In the afternoon we explored Kolmanskoppe, which is much closer to Lüderitz, and a more straightforward tourist location. This is the source of another set of iconic Namibian images, abandoned mining buildings filled with sand. We had several hours to just wander and photograph at will. However it was quite hard work due to the constant strong wind and biting sand. At one point the eye sensor of my camera got so clogged it stopped working.

Image from Kolmanskoppe

While the fabric of most buildings at Kolmanskoppe is in better condition than at Elizabeth Bay, what seems to happen is any which is not actively maintained eventually loses a window or part of the roof to the onslaught, and then the sand rapidly pours in.

The sands around Kolmanskoppe may be a bit worse, but generally sand is a recurring theme of this trip. Just outside Kolmanskoppe there’s a road sign which says "sand". Without any loss of accuracy the Namibians could just put this outside the airport and have done with it.

Hopefully we will shortly be back at the hotel for a shower. Tomorrow I want to photograph Lüderitz, which is a pretty town, and not completely full of sand!

| Poly-tickle commentary | |

| Camera: Panasonic DC-G9 | Date: 24-11-2018 09:49 | Resolution: 2409 x 3213 | ISO: 200 | Exp. bias: 0 EV | Exp. Time: 1/500s | Aperture: 4.9 | Focal Length: 193.0mm | Lens: LUMIX G VARIO 100-300/F4.0-5.6II | |

Sorry it’s a bit fuzzy and not properly focused, but that’s nothing to do with my photography!

| Ostrich and oryx at Wolwedans | |

| Camera: Panasonic DC-G9 | Date: 24-11-2018 09:48 | Resolution: 3386 x 3386 | ISO: 200 | Exp. bias: 0 EV | Exp. Time: 1/640s | Aperture: 4.5 | Focal Length: 150.0mm | Lens: LUMIX G VARIO 100-300/F4.0-5.6II | |

Lee agreed that we could all have a lie-in, so of course I woke up at 4, and was just getting back to sleep at 6 when the sun rose over the mountains and shone straight into my room. Bugger…

Today we moved on from Wolwedans to Lüderitz, a "Bavarian" town on the coast, which meant most of the day on the road. Southern Namibia is staggeringly empty: it’s over 300km of well-graded road from Wolwedans to Aus, at the South-East corner of the Naukluft National Park, during which we passed two graders working on the road, but we think no other vehicles at all.

The new game to entertain myself is to build up playlists for our intended destination or attractions. We were promised a view of the wild horses at Aus, so I had to work to a "wild horse" theme. Obviously I started with Ride A Wild Horse by Dee Clark and Wild Horses by the Rolling Stones. I have two versions of Horse With No Name, by America and Paul Hardcastle / Direct Drive, both good and quite different, so they both got added. A search for "Wild" was fruitful, including Born to be Wild, Walk on the Wild Side and Wild Thing (I have the Troggs’ original, but sadly not the Hendrix version at Monterey), and then several on a theme: Reap the Wild Wind, Ride the Wild Wind, Wild is the Wind (two versions, Bowie, but also Nina Simone which doesn’t really work in this playlist). Some of the others don’t quite work, but I never miss a chance to listen to Play That Funky Music (Wild Cherry), even if it’s cheating and slightly out of place.

Tomorrow it’s a "Diamonds and Ghosts" playlist, as we’re exploring the abandoned mining towns near Lüderitz.

We did get to see an ostrich on the way out of Wolwedans, but sadly the horses at Aus were a bit of an anti-climax. We’ll have another look on the way back on Monday.

Predictably as we got nearer the coast the African sun disappeared and the weather got a lot colder and greyer. I’m now sitting in my hotel room in Lüderitz, with major breakers rolling straight off the Atlantic and breaking on rocks a few feet from my bedroom window. What this presages for sleep tonight I’m not quite sure: it may be quite restful, or it may be bloody annoying. Time will tell.

| Oryx and zebra at the watering hole | |

| Camera: Panasonic DC-G9 | Date: 23-11-2018 15:10 | Resolution: 4515 x 2822 | ISO: 400 | Exp. bias: -33/100 EV | Exp. Time: 1/640s | Aperture: 8.0 | Focal Length: 300.0mm | Lens: LUMIX G VARIO 100-300/F4.0-5.6II | |

We’ve been a bit spoiled by the game drives at Okonjima, where it was almost a challenge not to see a great variety of game. The Wolwedans equivalent was less productive: after 4 hours in the jeeps under a blazing sun we saw a lot of oryx, one solitary zebra, fleeting glances of a jackal and a fox (they really don’t like being anywhere near humans), and a dot on the hillside which my longest lens just about resolved to something ostrich-shaped.

On the way back the sun was steadily on the back of my neck and I was lucky not to get sunburnt. I can really recommend Coppertone Sport.

However, I really mustn’t grumble. The scenery is magnificent, the oryx are fun, and I’m still privileged to be here. Please enjoy another picture of an oryx:

Oryx sheltering from the sun. I could usefully have done the same thing!

| Dead tree at Wolwedans, Namib-Rand Game Reserve | |

| Camera: Panasonic DC-G9 | Date: 22-11-2018 18:58 | Resolution: 3888 x 3888 | ISO: 200 | Exp. bias: 0 EV | Exp. Time: 1/125s | Aperture: 8.0 | Focal Length: 46.0mm | Lens: LUMIX G VARIO 35-100/F2.8 | |

There’s a long-running joke between Frances and myself that I like to use a dead tree as foreground interest in my photos. In Namibia, it’s often the only viable target, and I’ve found that I’m in very good company. We all had a couple of goes at this one, first in poorer light, and then when the sun appeared from behind a cloud we got the Land Rover to reverse back down the track to have another go. I think it works…

| Sunset in Wolwedans, Namib-Rand Game Reserve | |

| Camera: Panasonic DC-G9 | Date: 22-11-2018 19:32 | Resolution: 5593 x 3495 | ISO: 640 | Exp. bias: 0 EV | Exp. Time: 1/60s | Aperture: 8.0 | Focal Length: 12.0mm | Lens: LUMIX G VARIO 12-35/F2.8 | |

My cunning plan to have a lie-in worked, and I had a great night’s sleep, sorted myself out, and had a leisurely breakfast. Those who had chosen the "third 4.15 start in a row" option got back looking distinctly frazzled.

The drive to our next location was mercifully quite short, as we were getting onto progressively more tricky unsurfaced roads. We’ve come to a private game reserve called Wolwedans (Vol-Ver-Dance). This is one of about half a dozen privately owned reserves which together make up the Namib-Rand Game Reserve, a game preservation area over 2,000 km² in area, or a bit bigger than the area inside the M25. Wolwedans has a total of 20-30 rooms split over 3 or 4 camps, and is usually frequented by the likes of Brad and Angelina, although I suspect they fly/flew in rather than taking the long road route. I’m not quite sure how we’ve managed to get here for a reasonable fee, but very grateful that someone’s made it work.

The topography is quite different to anything we’ve seen before, with a combination of large savannah areas, dunes, and quite substantial mountains particularly along the western edge where the reserve adjoins the Namib Desert National Park. While the terrain is obviously African, the "big skies" also put me in mind of Montana. So far we have had a very dramatic sunset and sunrise, and we’re off to try and track some game down later.

The drive back after sunset last night was interesting, with the drivers of the two Land Rovers opting to drive with lights off, relying on their night sight. It was quite peaceful, and probably avoided spooking the game (which seems to be a guiding rule here), but I suspect I would have used more light.

The Namibian diet (or at least the tourist version) is taking its toll on my waistline. Last night we only got to dinner at 9 and then had 5 courses (although the first three were only a couple of mouthfuls each). I couldn’t get into my green shorts today, so I just hope the other ones come back safely from the laundry… I suspect it’s going to be the apple and coffee diet for me when I get back.

| Tree at Deadvlei | |

| Camera: Panasonic DC-G9 | Date: 21-11-2018 07:06 | Resolution: 5224 x 2939 | ISO: 200 | Exp. bias: 0 EV | Exp. Time: 1/400s | Aperture: 6.3 | Focal Length: 38.0mm | Lens: LUMIX G VARIO 35-100/F2.8 | |

Deadvlei is the home of the iconic Namibian desert image: a dead tree on a salt plain with an orange dune in the background. Despite the ubiquity of such images, in practice it’s a single relatively small location, a bowl in the dunes maybe 500m x 200m. Hundreds of years ago it was a small oasis with fairly healthy vegetation, but the shifting dunes cut off its water supply, and the trees died. However in the dry, sterile conditions they have only decomposed very slowly, and are effectively now petrified. The other thing which is surprising is the salt pan – I was expecting a fairly thin even crust like you see in pictures of Bonneville, but instead it’s a rocky, lumpy and very solid arrangement.

Our tour bus took us the 70km down the Sossusvlei valley to the end of the surfaced road, and we then took a 4×4 shuttle 4km through the sands to the jumping off point for several walks. It’s about 1.1km to Deadvlei, a distance which I would normally knock off in about 12 minutes, but walking on the sand proves very difficult, and it took me over half an hour. My combination of small feet and, er, large frame means I just sink into the sand with every step, and it’s suspiciously like wading through treacle.

Regardless, our timing was good and the walk fully justified by the scene. We had timed our arrival to be there just as the sun was reaching into the bowl, and we got great shots of both trees just emerging from the shadows, and in full light against the orange dunes and cloudless blue sky.

We were just packing up to go back when we got the first hint of what was coming, some lines of sand being whipped across the salt, which stung the legs as they hit them. We had a brief respite as we walked back, but by the time we arrived at the car park we were in the middle of a full-blown dust storm, so bad at times other vehicles were invisible except for their lights. We had a 4km drive in an open 4×4 through this, which was not pleasant. I’m not sure that it was ever actually on my list, but "sandstorm" can now be ticked off.

We had a relaxing middle of the day, but I was starting to feel a bit weary and couldn’t face the walk into Deadvlei twice in one day, so at the end of the day while the rest of the group went back to Deadvlei John and I commandeered the 4×4 and went photographing dunes off the sand road. We got some decent shots, but the salty ground and scrub vegetation make getting a neat foreground a real challenge. I made a few "rookie errors", including shots out of focus and then trapping my finger in the car door, and decided that I really need to not do three 4.15 starts in a row. Tomorrow I’m going to boycott the dawn start and have a lie in…