Here are some facts and figures about our trip, and some guidance for prospective travellers and photographers.

Cameras and Shot Count

I took around 2900 shots (broken down to 2788 on the Panasonic G9, 78 on the GX8, and a handful each on my phone, the Sony RX100 and the infrared GX7). A fair proportion of these were for "multishot" images of various sorts, including 3D, focus blends, panoramas (especially at Wolwedans), HDR / exposure brackets (essential at Kolmanskoppe), and high-speed sequences (the bushmen demonstrations, and a few wildlife events). I’m on target for my usual pattern: about a third to half the raw images will be discarded quickly, and from the rest I should end up with around 200 final images worth sharing.

The G9 was the workhorse of the trip, and behaved well, although it did have a slight blip mid-trip when the eye sensor got clogged and needed to be cleaned. Its battery life is excellent, frequently needing only one change even in a heavy day’s shooting, and the two SD card slots meant I never had to change a memory card during the day! The GX8 did its job as a backup and for when I wanted two bodies with different lenses easily to hand (the helicopter trip and a couple of the game drives). However, it is annoying that two cameras which share so much technically have such different control layouts. If I was a "two cameras around the neck" shooter I would have to choose one or the other and get two of the same model. As I’ve noted before, my Panasonic cameras and the Olympus equivalents proved more usable on the helicopter trip than the "big guns", and if you’re planning such a flight then make sure you have a physically small option.

As notable as what I shot was what I didn’t. This trip generated no video, and the Ricoh Theta 360-degree camera which was always in my bag never came out of its cover. Under the baking African sun the infrared images just look like lower resolution black and white versions of the colour ones, and after a couple of attempts I didn’t bother with those, either.

This was the first trip in a while where I didn’t need to either fall back to my backup kit, or loan it out to another member of the group. One of the group did start off with a DOA Nikon body, somehow damaged in the flight out, but his other body worked fine. There was an incident where someone knocked his tripod over and broke a couple of filters, but the camera and lens were fine. Otherwise all equipment worked well. Maybe these things are getting tougher.

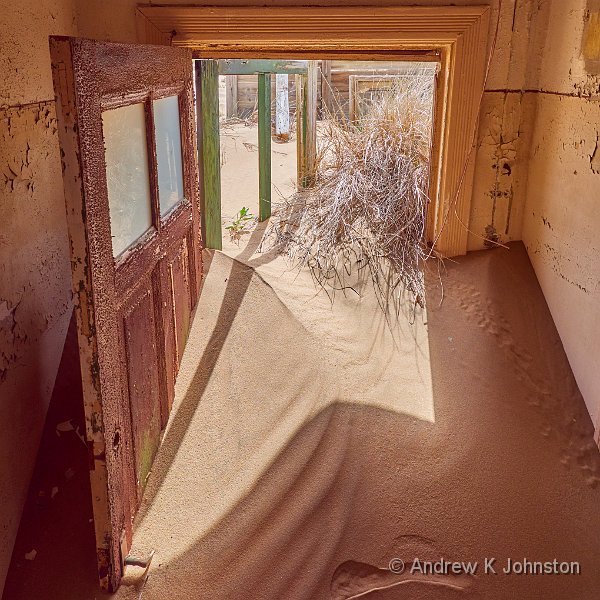

Namibia is absolutely full of sand, and there’s a constant fine dust in the air which is readily visible if you go out in the dark with a torch. This gets all over your kit especially if you go trekking through the dunes (tick), spend all afternoon bouncing through the savannah in an open jeep (tick), encounter a sandstorm (tick), or spend half a day in a ghost town world famous for its shifting sands (BINGO!!!). The ideal solution to remove the dust is a can of compressed air, but they really don’t like you taking one on a plane. On previous trips to dusty environments I’ve managed to get to a hardware store early on and buy a can, but that wasn’t possible this time. Squeezy rubber bulbs are worse than useless. In the end I just wiped everything down with wet wipes, but it’s not ideal. I’ve now found a powerful little USB blower (like a tiny hair drier) which may work, but I won’t be able to really test it until the next trip.

It’s a good practice to check your sensor at the end of every day, especially if like me you use a mirrorless camera usually with an electronic shutter (meaning the physical shutter is often open when you change lenses). I recently purchased a "Lenspen Sensor Klear" which is an updated version of the old "sensor scope" but with proper support for APS-C and MFT lens mounts. That was invaluable for the daily check, but in practice I didn’t find sensor dust to be a significant problem.

The subject matter is very much landscape and wildlife. Others may have different experiences, but I suggest for art, architecture, action and people you should look elsewhere.

Travel

Setting aside my complaints about the Virgin food service and the Boeing 787, the travel all worked well. The air travel got us to and from Windhoek without incident. Wild Dog Safaris provided the land transportation, with Tuhafenny an excellent, patient, driver/guide, and a behind the scenes team managing the logistics and local arrangements. The latter were mainly seamless and without issue, although there was a bit of juggling regarding some of the transport at Sossusvlei, and some of the departure airport transfers. I would certainly recommend Wild Dog Safaris.

If you want to cover anything like the sort of ground we did on a Namibia adventure, then you will spend a lot of time on the road. I reckon that on at least 7 days we spent 5 or more hours travelling, and on most of the others we probably managed 2+ on shorter hops or travelling to specific locations. According to Tuhafenny’s odometer we racked up 3218 km, or about 2000 miles, and that excludes the mileage in open 4x4s provided by the various resorts. The roads were at least empty and usually fairly straight and smooth, even those without tarmac, although the odd jolt and bump was inevitable. However we all managed to get some decent sleep while on the road, and I could dead-reckon our ETAs fairly accurately at 50mph, which is a far cry from the 10mph average I worked out for the Bhutan trip!
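For anyone who wants to repeat the dead-reckoning arithmetic, here is a minimal Python sketch. The function names and the 150-mile example leg are illustrative assumptions; only the 3218 km total and the 50mph and 10mph averages come from the figures above.

```python
# Minimal sketch of the distance and ETA arithmetic (names are illustrative).

KM_PER_MILE = 1.609344  # international mile, by definition


def km_to_miles(km: float) -> float:
    """Convert a distance in kilometres to miles."""
    return km / KM_PER_MILE


def eta_hours(distance_miles: float, average_mph: float = 50.0) -> float:
    """Dead-reckoned travel time in hours at a constant average speed."""
    if average_mph <= 0:
        raise ValueError("average speed must be positive")
    return distance_miles / average_mph


if __name__ == "__main__":
    total_miles = km_to_miles(3218)  # odometer total for the trip
    print(f"Trip total: about {total_miles:.0f} miles")

    leg = 150  # hypothetical leg length in miles
    print(f"{leg} miles at 50mph: {eta_hours(leg):.1f} hours")
    print(f"{leg} miles at 10mph: {eta_hours(leg, 10):.1f} hours")
```

The same leg takes five times as long at the Bhutan average, which is the gap the comparison is getting at.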

Although most locations have airstrips, there doesn’t seem to be any equivalent of the air shuttles which move people between centres in Myanmar, at least not unless you have vast funds for private charters. Just make sure you have a soft bottom and something to keep you entertained on the journeys.

Practicalities

I was advised before travelling to carry cash (Sterling) and change it in Namibia, on the same sort of basis as my Cuba, Bhutan and Myanmar trips. That was complete nonsense. In Namibia all the larger merchants happily take cards and there are ATMs in every town. Changing £200 at the airport was painless enough, but my attempt to change £90 in Lüderitz turned into one of the most annoying and convoluted financial transactions I have been involved in, and I’m tempted to include buying cars and houses in the list! Namibia hasn’t quite got to the point where you can just wave your phone at the till to buy an ice-cream, but it’s getting there quickly.

Another bit of complete nonsense is "it’s cold in the desert". Yes, it may be a bit chilly first thing some mornings, but I needed a second layer over my T-shirt for precisely two short pre-dawn periods. Obviously if you’re the sort of person who gets a chill watching a documentary about penguins, then YMMV, but I was clearly heavy by a sweatshirt, a couple of pairs of long trousers and one raincoat. In addition to shorts and T-shirts, one fleece, plus the jacket and trousers for the trip home, would be adequate.

On a related subject, there’s one thing that almost all the hotels got wrong. Apart from right at the coast daytime temperatures are up well into the 30s if not the 40s, and the temperature inside most of the lodgings at bed-time was in the high 20s, dropping to the low 20s by the end of the night (all temperatures in Celsius). In those temperatures I do NOT need a 50 Tog quilt designed for a Siberian Winter. One sheet would be plenty, with maybe the option of a second blanket if absolutely necessary. The government-run lodge at Sossusvlei got this right, no-one else did.

It may be dusty, and there are little piles of dung everywhere from the local wildlife, but beyond this Namibia is basically clean. You can drink the tap water pretty much everywhere, and it’s not a game of Russian Roulette having a salad. It made a welcome change from the experience of Morocco and my Asian trips not having to manage our journey around tummy upsets, which is just as well when we had at least two stretches of over 150 miles without an official stop. Obviously sensible precautions like regular hand cleansing apply, but Namibia really presents less of a challenge in this area.

The larger challenge of the Namibian diet is that there’s a lot of it. Portions tend to be large, and there’s a lot of red meat, frequently close relatives of the animals you have just been photographing. I was fine with this, but I suspect vegans should not apply. Between the food, the beer and snacks in the bus I definitely put on about half a stone, which I’m desperately trying to lose again before Christmas…

Communications are good in the larger towns, but elsewhere you may struggle for a mobile signal and the roaming costs for calls, texts and particularly data are very high. WiFi worked well at the town locations, but at the more remote sites service was intermittent and almost unusably slow. On the other hand, we were in the middle of Africa! This is one of those cases where you wonder not that a thing is done well, but that it is done at all. (The odd exception, again, was Sossusvlei, where they charged about £3 a day, but the bandwidth was excellent.) However Namibia is a country where practical problems get fixed, and I suspect in 5 years this will be a non-issue. In the meantime if you want to do anything more than check the news headlines (say, just for the sake of argument, update a photo blog :)) then plan ahead and batch updates ready for when you’re somewhere more central.

I did suffer one related annoyance. On a couple of occasions an Android app I was using to entertain myself on the long drives just stopped working pending a licensing check, which couldn’t be completed until I got connectivity at the end of the day. There’s not much to be done about this, apart from a post-incident moan to the app developer to make the check more forgiving. It’s worth having a Plan B for anything absolutely vital.

Do carry a small torch. It’s great to get away from light pollution, but the flipside is that it’s dark (shock, horror!!). As well as for night photography we often had to walk quite long distances between our accommodation and the resorts’ central areas, with minimal lighting, and you really don’t want to trip over a sleeping warthog or tread in a pile of oryx poo. I have a tiny, powerful cyclists’ head torch which is ideal. It’s also rechargeable via USB, although as far as I can remember it’s still on its first charge from when I bought it in 2015, so I’m not quite sure how that works.

Finally, retail therapy. Surprisingly for a country trying to optimise the income from high-value eco-tourism, there was almost nothing to buy until we got back to Windhoek and visited a craft market. Most resorts had a shop, but I wasn’t impressed by the merchandising, and when I did find something I liked it was usually not available in my size (clothing), or language (books). It’s not the purpose of the trip, but I do like the odd bit of retail therapy. There’s an opportunity for some enterprising young Namibians.

In summary, Namibia is a very civilised way to see the wild. Some of the wild is not quite as wild as it might be, but that’s part of the trade-off which makes it so accessible, and this certainly worked for me.
