Category Archives: Thoughts on the World

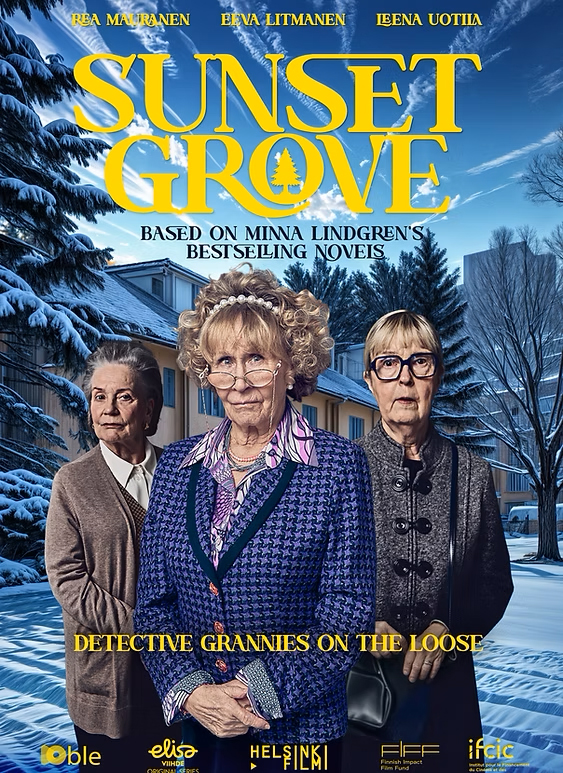

I can’t recommend this highly enough. Best “dangerous old codgers” comedy since RED. Last of the Summer Wine via Harlan Coben. If you’re in a tight spot and your support is a choice between Jack Bauer and the daft old Finnish ladies, don’t rush to a decision. Dark. Exciting. Hilarious!

Available in the UK on All4: https://www.channel4.com/programmes/sunset-grove

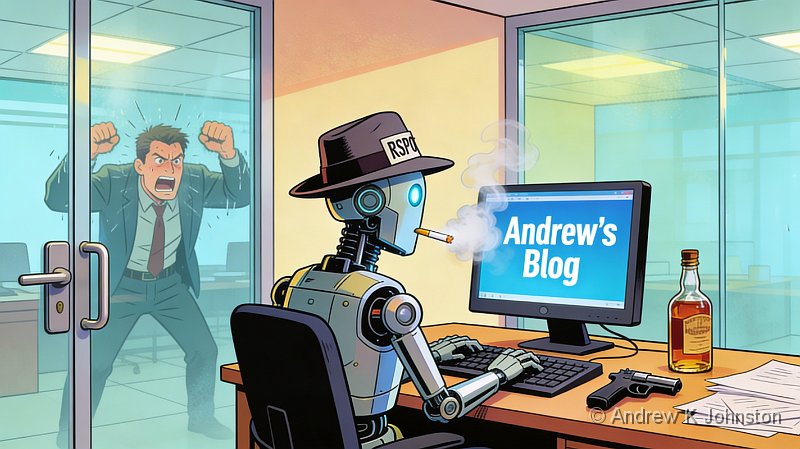

Are we becoming the feckless race from The Time Machine? Can we resist the temptation? Background This article is the result of the convergence of two separate prompts. About a week ago I was bemoaning our increasing dependency on automation and Continue reading →

Friday, February 20, 2026 in Thoughts on the World

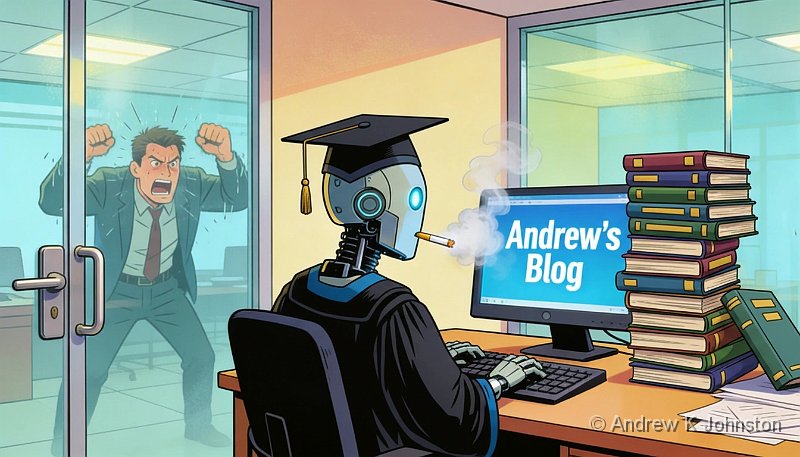

Introduction This is an appendix to a more complete article; see https://www.andrewj.com/blog/2026/are-we-becoming-the-eloi-the-academic-version/. I was recently bemoaning our increasing dependency on automation and our general inability to take charge and either fix or work around broken things, and I thought of Continue reading →

My photography mentor, Bob Kiss, recently posted an image of his, taken in Tuscany, of a Tuscan field scene shot through a window, with the light carefully balanced so that you can clearly see both the exterior, and the interior Continue reading →

Wednesday, February 11, 2026 in Photography, Thoughts on the World

I spent much of the last few years working on a large company’s Net Zero project, within which a significant element of my role was trying to educate people to understand electrical power and emissions calculations. It was hard enough Continue reading →

Warning: contains spoilers. I had been looking forward to Kathryn Bigelow’s new film for Netflix, A House of Dynamite. On the face of it this should be exactly our sort of film. Vantage Point, the 2008 film which shows an Continue reading →

Sunday, October 26, 2025 in Reviews, Thoughts on the World

Rescue, Don’t Replace One of the things which attracted us to our house about 30 years ago was a great feature: what is known as a “Chinese Circle” in the courtyard end wall, which provides a view into, from and Continue reading →

Excellent Example: Microsoft Visual Studio. You finish your work, and when you exit from Visual Studio, it prompts you with “Updates are available, would you like to install them now?”. There are Yes and Cancel (= defer to next time) Continue reading →

Saturday, September 20, 2025 in Thoughts on the World

One of the great things about watching a lot of cop shows on television is the endless variety of mechanisms used to set up key characters. Recently we’ve had… The Island: female detective born on Harris returns there after several Continue reading →

Tuesday, March 25, 2025 in Thoughts on the World

With Apologies to My Photography Tutors First, I’d like to apologise to all the authors, tutors, mentors and tour leaders who have tried to instil in me “correct” tripod technique. As they say, it’s not you, it’s me. I don’t particularly Continue reading →

Just how wrong can an AI get it? As part of my effort to profile the power consumption of GenAI, I decided to try and summarise one of my travel blogs using ChatGPT and the other big public models, plus Continue reading →

Tuesday, September 10, 2024 in Thoughts on the World

Just how bleak can an AI’s world view become? One of my clients asked me to write an article on the environmental impact of generative AI. Like a lot of large corporations they are starting to embrace GenAI, but they Continue reading →
